I have more than ten years of software testing experience. On this page I’ll keep outlining some of the best practices and testing approaches I’ve used to great effect.

During some recent study sessions I’ve been looking through various approaches to mind mapping to help break down complex test efforts. Here is a great example from the Ministry of Testing.

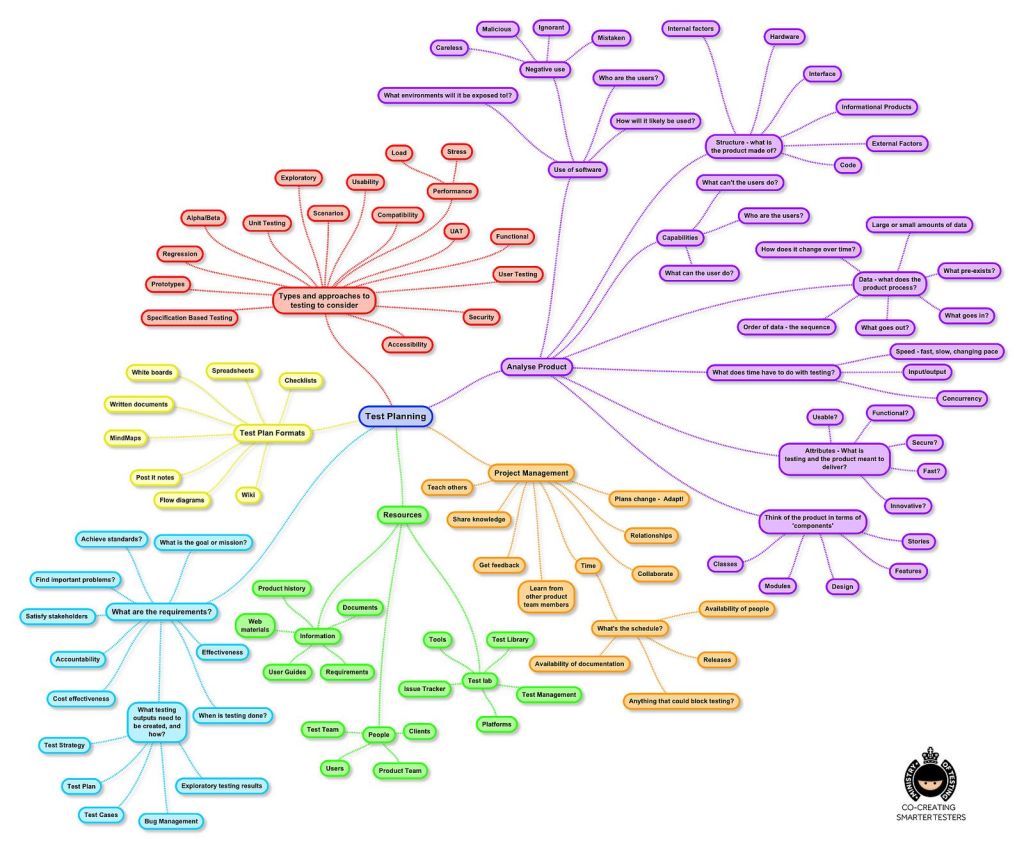

and one from findingdeefex

When creating a test plan, consider the following elements:

- Introduction

    - Describe the work or project

- Features to be tested

- Features not to be tested

- Deliverables

- Test Approach

- Pass/Fail criteria

- Environment(s)

- Tools

- Personnel

- Schedule

- Risks

- Approvals

What makes a good bug report?

- Short title/description

- Build info

- Environment

    - Device type

    - OS

    - Browser version

- Repro steps

    - Skip any narrative; just list the steps as bullets

- Actual/Expected Result

- Workaround(s), if applicable

- Screencaps/videos/logs

    - To demonstrate the issue and support detailed analysis

    - Crash logs, if present

- Priority and/or Severity

    - These are often assigned at triage rather than at filing time

- Assignee

    - If you already know the responsible dev, you may be able to assign the bug directly.
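To make the checklist concrete, here is a minimal Python sketch of a bug report as a data structure. The `BugReport` class, its field names, and the `missing_fields` helper are all hypothetical names made up for illustration; they do not correspond to any particular bug tracker's API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BugReport:
    """Hypothetical container mirroring the bug report checklist."""
    title: str
    build: str
    device: str
    os: str
    browser: str
    repro_steps: List[str]
    actual_result: str
    expected_result: str
    workarounds: List[str] = field(default_factory=list)
    attachments: List[str] = field(default_factory=list)  # screencaps, videos, logs
    severity: Optional[str] = None  # often assigned at triage
    priority: Optional[str] = None  # often assigned at triage
    assignee: Optional[str] = None  # only if the responsible dev is known

    def missing_fields(self) -> List[str]:
        """Return the names of required fields that are empty."""
        required = [
            "title", "build", "device", "os", "browser",
            "repro_steps", "actual_result", "expected_result",
        ]
        return [name for name in required if not getattr(self, name)]


# Example data is invented purely for illustration.
report = BugReport(
    title="Login button unresponsive after session timeout",
    build="1.4.2",
    device="Desktop",
    os="Windows 11",
    browser="Firefox 126",
    repro_steps=[],  # forgot to fill these in
    actual_result="Nothing happens on click",
    expected_result="User is redirected to the login page",
)
print(report.missing_fields())
```

A check like `missing_fields` could run before a report is filed, so incomplete reports get caught before they reach triage.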

Seven Testing Principles

- Testing shows the presence of defects, not their absence

- Exhaustive testing is impossible

- Early testing saves time and money

- Defects cluster together (bugs bunch)

- Pesticide paradox

- Testing is context dependent

- Absence-of-errors fallacy

Test Case Design Strategies

- Boundary-Value Analysis

- Equivalence Partitioning

- Decision Table Testing

- State Transition Testing

- Use Case Testing

- Personas
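The first two strategies lend themselves to a quick illustration. Below is a rough Python sketch, assuming an inclusive integer input range; `boundary_values`, `equivalence_partitions`, and the `is_valid_age` function under test are hypothetical names invented for this example.

```python
from typing import Dict, List


def boundary_values(low: int, high: int) -> List[int]:
    """Boundary-value picks for the inclusive range [low, high]:
    just outside, on, and just inside each boundary."""
    if low > high:
        raise ValueError("low must not exceed high")
    # A set removes duplicates when the range is very narrow.
    return sorted({low - 1, low, low + 1, high - 1, high, high + 1})


def equivalence_partitions(low: int, high: int) -> Dict[str, int]:
    """One representative value per partition: below, inside, and above the range."""
    return {
        "invalid_below": low - 1,
        "valid": (low + high) // 2,
        "invalid_above": high + 1,
    }


def is_valid_age(age: int) -> bool:
    """Hypothetical function under test: accepts ages 18 through 65."""
    return 18 <= age <= 65


for value in boundary_values(18, 65):
    print(value, is_valid_age(value))
```

Equivalence partitioning keeps the number of cases small by testing one value per class, while boundary-value analysis concentrates on the edges, where off-by-one mistakes tend to live.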

A Short List of Test Heuristics

- Interruptions

    - log off, shutdown, reboot, kill process, disconnect, sleep/hibernate, timeout, cancel

- Starvation

    - CPU, memory, disk, network

    - Any/all, max capacity

- Position

    - beginning, middle, end

- Selection

    - some, none, all

- Personas

    - power user, new user, internal customer, admin
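The Position and Selection heuristics are easy to turn into test data. Here is a small Python sketch; `selection_cases` and `position_cases` are hypothetical helper names, and picking the first half of the list for "some" is just one arbitrary choice.

```python
from typing import Dict, List, Sequence, TypeVar

T = TypeVar("T")


def selection_cases(items: Sequence[T]) -> Dict[str, List[T]]:
    """Selection heuristic: none, some, and all of the available items."""
    return {
        "none": [],
        # "some" is taken as the first half, with at least one item if any exist.
        "some": list(items[: max(1, len(items) // 2)]),
        "all": list(items),
    }


def position_cases(items: Sequence[T]) -> Dict[str, int]:
    """Position heuristic: indexes of the beginning, middle, and end."""
    if not items:
        raise ValueError("need at least one item")
    return {"beginning": 0, "middle": len(items) // 2, "end": len(items) - 1}


rows = ["a", "b", "c", "d", "e"]
print(selection_cases(rows))
print(position_cases(rows))
```

For a list view, for example, this would suggest trying a bulk action with nothing selected, a few rows selected, and every row selected, and then editing the first, middle, and last rows.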

Here is my list of excellent ‘cheat sheets’ to help get the QA juices flowing:

- An older but still valid PDF from Quality Tree/Test Obsessed

- Here’s another one, Michael Hunter’s ‘You Are Not Done Yet’ checklist

- James Bach’s Heuristic Test Strategy Model

- Ministry of Testing version

- A pretty good article: How to Think Like a Tester

Validation vs Verification

- These terms are often conflated. Below are the definitions taken directly from the ISTQB.

- Validation

    - “Confirmation by examination and through provision of objective evidence that the requirements for a specific intended use or application have been fulfilled.”

    - Often stated as, “Did we build the right product?”

    - The actual testing of the software

- Verification

    - “Confirmation by examination and through provision of objective evidence that specified requirements have been fulfilled.”

    - Is the spec met?

    - Starts with ‘testing the spec’, looking through design docs, etc.

    - Often stated as, “Did we build the product right?”

QA Metrics

- Defect Leakage, aka Leak Rate

    - (No. of defects found in UAT / No. of defects found in QA testing)

- Rework Effort Ratio

    - (Actual rework effort spent in that phase / Total actual effort spent in that phase) × 100

- Requirement Creep

    - (Total number of requirements added / No. of initial requirements) × 100

- Schedule Variance

    - (Actual date of delivery – Planned date of delivery)

- Cost of finding a defect in testing

    - (Total effort spent on testing / Defects found in testing)

- Schedule Slippage

    - (Actual end date – Estimated end date) / (Planned end date – Planned start date) × 100

- Passed Test Cases Percentage

    - (Number of passed tests / Total number of tests executed) × 100

- Failed Test Cases Percentage

    - (Number of failed tests / Total number of tests executed) × 100

- Blocked Test Cases Percentage

    - (Number of blocked tests / Total number of tests executed) × 100

- Fixed Defects Percentage

    - (Defects fixed / Defects reported) × 100

- Accepted Defects Percentage

    - (Defects accepted as valid by dev team / Total defects reported) × 100

- Defects Deferred Percentage

    - (Defects deferred for future releases / Total defects reported) × 100

- Critical Defects Percentage

    - (Critical defects / Total defects reported) × 100

- Average time for a development team to repair defects

    - (Total time taken for bug fixes / Number of bugs)

- Number of tests run per time period

    - (Number of tests run / Total time)

- Test design efficiency

    - (Number of tests designed / Total time)

- Test review efficiency

    - (Number of tests reviewed / Total time)

- Bug find rate, or number of defects per test hour

    - (Total number of defects / Total number of test hours)
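Most of these metrics are simple ratios, so they are easy to script. Here is a minimal Python sketch of a few of them; the function names and the sample numbers are made up for illustration, and a zero denominator is treated as an error rather than silently returning 0.

```python
from datetime import date


def percentage(part: float, whole: float) -> float:
    """Return part / whole as a percentage."""
    if whole == 0:
        raise ValueError("denominator is zero; the metric is undefined")
    return part * 100 / whole


def defect_leakage(found_in_uat: int, found_in_qa: int) -> float:
    """Defects found in UAT divided by defects found in QA testing (a plain ratio)."""
    if found_in_qa == 0:
        raise ValueError("no defects found in QA testing; the metric is undefined")
    return found_in_uat / found_in_qa


def schedule_slippage(actual_end: date, estimated_end: date,
                      planned_start: date, planned_end: date) -> float:
    """Days of slippage as a percentage of the planned duration in days."""
    slipped_days = (actual_end - estimated_end).days
    planned_days = (planned_end - planned_start).days
    return percentage(slipped_days, planned_days)


# Invented sample data: 50 tests executed, 45 passed, 3 failed, 2 blocked.
executed = 50
passed_pct = percentage(45, executed)
failed_pct = percentage(3, executed)
blocked_pct = percentage(2, executed)

leak = defect_leakage(found_in_uat=5, found_in_qa=50)

slip_pct = schedule_slippage(
    actual_end=date(2024, 2, 6),
    estimated_end=date(2024, 1, 31),
    planned_start=date(2024, 1, 1),
    planned_end=date(2024, 1, 31),
)

print(passed_pct, failed_pct, blocked_pct, leak, slip_pct)
```

The remaining percentage metrics (fixed, accepted, deferred, critical) follow the same pattern and could reuse `percentage` directly.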

References:

- https://www.invensis.net/blog/it/software-test-design-techniques-static-and-dynamic-testing

- https://www.guru99.com/equivalence-partitioning-boundary-value-analysis.html

- https://conorfi.com/software-testing/software_testing_heuristics

- https://www.softwaretestinghelp.com/how-to-write-good-bug-report

- https://www.guru99.com/software-testing-metrics-complete-tutorial.html

- https://glossary.istqb.org/en_US/search